Generation AI: Teaching a new kind of tech savvy

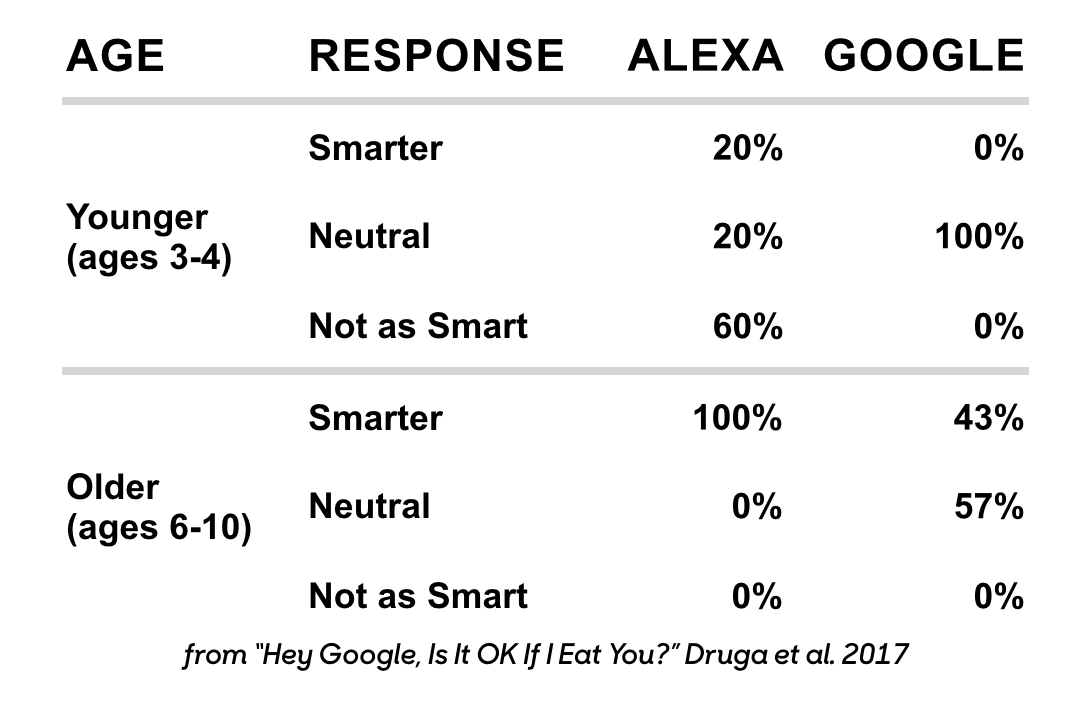

In 2017, my colleagues in the Personal Robots group ran a study. Children ages 6 to 10 were invited to the Media Lab to play with various smart devices (think: Amazon Alexa or Google Home). After the kids had an opportunity to play and interact with these devices, the researchers asked each of them the following question: do you think the device is smarter than you, just as smart as you, or less smart than you? Here were the responses:

Findings from “Hey Google, Is It OK If I Eat You?” (Druga et al., 2017). Credit: Randi Williams

My labmate Randi likes to tell a story about one of the kids in the study. A young girl asked, “Alexa, what do sloths eat?”

In a synthetic voice, the Amazon Alexa responded, “I’m sorry, I don’t know how to help you with that.”

“That’s okay!” the girl exclaimed. She walked over to another Amazon Echo, picked it up, and said, “I’ll see if the other Alexa knows.”

(l-r) Study helper Yumiko Murai, 6-year-old Viella, and study co-author Stefania Druga chatting with Google Home about sloths. Credit: Randi Williams

I love this story, but it also worries me. Children are naturally curious and eager to use technology as a lens for making sense of the world around them. But the results of this study tell me one thing: our children place a ton of authority in technology without clearly understanding how that technology works. And now we are in the era of AI, when children are growing up not just as digital natives but as AI natives (as my advisor, Cynthia Breazeal, likes to say). While this might be harmless when searching for what sloths eat (mainly leaves and occasionally insects, in case you were wondering), I fear children placing this much authority in more pervasive algorithms, such as YouTube’s recommendation algorithm.

In 2018, Zeynep Tufekci penned an article in The New York Times called “YouTube, the Great Radicalizer,” in which she recounts watching videos of political rallies on YouTube, from Donald Trump as well as from Hillary Clinton and Bernie Sanders. After she watched these videos, as any person seeking to be politically informed might, the YouTube recommendation algorithm, paired with the “Autoplay” feature, increasingly recommended conspiratorial content to her. Whether she had been watching left-leaning or right-leaning content, the algorithm bombarded her with videos denying the Holocaust or alleging that the US government had coordinated the September 11 attacks.

This finding, combined with the facts that YouTube is the most popular social media platform among teens and that 70% of adults use YouTube to learn how to do new things or to learn about news in the world, is what makes me worried about the authority our children place in technology, and specifically in artificial intelligence.

So…how can we prepare our children to be conscientious consumers of technology in the era of AI? And can we empower them to be not only conscientious consumers of AI systems, but also conscientious designers of technology?

Empowering kids through AI + ethics education

These two questions are at the heart of my research at the Media Lab, where I seek to translate the theoretical findings of those who study artificial intelligence, design, and ethics into actionable teaching exercises.

The first pilot of my ethics + AI curriculum was a three-session workshop I ran last October at David E. Williams Middle School, where I worked with more than 200 middle school students. During the three sessions, students learned the basics of deep learning, explored algorithmic bias, and learned how to design AI systems with ethics in mind.

The goal of the course was to get students to see artificial intelligence systems as changeable, and give them the tools to effect change. The idea is that if we are able to see a system as something that is flexible, to know that it doesn’t have to be the way that it is, then the system holds less authority over us. The algorithm becomes, as Cathy O’Neil once put it, simply an opinion.

Getting students to see algorithms as changeable first meant getting them to see algorithms at all. They needed to become aware of the algorithms they interact with daily, such as Google Search or the facial recognition systems in Snapchat. Once students could identify algorithms in the world around them, they could learn how these systems work: for example, how does a neural net learn from a dataset of images?
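To make that last question concrete, here is a minimal sketch of the simplest possible neural network, a single artificial neuron (a perceptron), learning to tell cats from dogs. It assumes each image has already been reduced to two hypothetical numeric features, ear pointiness and snout length; those features, their values, the learning rate, and the epoch count are invented for illustration and are not taken from the workshop materials.

```python
# Hypothetical training data: ((ear_pointiness, snout_length), label).
# Real image classifiers learn their own features from pixels; these two
# hand-picked numbers are an illustrative assumption.
TRAINING_DATA = [
    ((0.9, 0.2), "cat"),
    ((0.8, 0.3), "cat"),
    ((0.3, 0.8), "dog"),
    ((0.2, 0.9), "dog"),
]


def predict(weights, bias, features):
    """Answer "dog" when the weighted sum of the features is positive."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return "dog" if score > 0 else "cat"


def train_perceptron(examples, epochs=10, learning_rate=0.1):
    """Classic perceptron rule: nudge the weights only after a wrong guess."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            target = 1 if label == "dog" else 0
            guess = 1 if predict(weights, bias, features) == "dog" else 0
            error = target - guess  # -1, 0, or +1
            weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train_perceptron(TRAINING_DATA)
print(predict(weights, bias, (0.75, 0.3)))  # cat
print(predict(weights, bias, (0.3, 0.75)))  # dog
```

On this toy data the weights stop changing after the second pass, because every training example is then classified correctly. A deep network stacks many such units and adjusts them with gradient descent instead of this all-or-nothing update, but the core idea, adjusting weights to reduce mistakes on labeled examples, is the same.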

I believe it’s essential to introduce ethics topics at this point, as soon as students have learned the basic technical material. At the collegiate level, an ethics or “societal impact” lesson is often relegated to the last class of the semester, or to a separate course altogether. This is problematic for two reasons. First, it inadvertently teaches students to think of ethics as an afterthought rather than as fundamental to the algorithm-building or design process. Second, instructors often run out of time! I think back to the many U.S. history classes I took in elementary, middle, and high school: my teachers never got past the Civil Rights Act of 1964. As an adult, I have a vague sense of what the Cold War was about, but I couldn’t tell you the details the way I can about the French Revolution. This is not the fate we want for ethics topics in technology classes. Ethics content has to be integrated with technical content.

For example, during the pilot, students trained their own cat-dog classifiers. But there was a twist: the training dataset was biased. Cats were overrepresented and dogs were underrepresented in the training set, leading to a classifier that classified cats with high accuracy but often mislabeled dogs as cats. After seeing how the training data affected the classifier, students had the opportunity to re-curate their training set so that the classifier worked equally well for dogs and cats.
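The effect the students saw can be reproduced in a few lines. This is a minimal sketch, not the workshop’s actual setup: it swaps the students’ neural network for a much simpler lookup-table classifier that predicts the majority label seen for each value of a single hypothetical feature (a 0 to 4 “size bucket”), and every count below is invented for illustration. Because cats outnumber dogs ten to one in the biased training set, cats win every bucket where the two animals overlap.

```python
from collections import Counter, defaultdict

# Hypothetical per-bucket example counts for one "size bucket" feature (0-4).
CAT_TRAIN = [40, 40, 20, 0, 0]              # 100 cat images
DOG_TRAIN_BIASED = [0, 2, 3, 3, 2]          # only 10 dog images
DOG_TRAIN_RECURATED = [0, 20, 30, 30, 20]   # 100 dog images after re-curation
CAT_TEST = [4, 4, 2, 0, 0]
DOG_TEST = [0, 2, 3, 3, 2]


def make_examples(label, counts):
    """Expand per-bucket counts into (bucket, label) pairs."""
    return [(bucket, label) for bucket, n in enumerate(counts) for _ in range(n)]


def train(examples):
    """Learn the majority label for each bucket seen in training."""
    votes = defaultdict(Counter)
    for bucket, label in examples:
        votes[bucket][label] += 1
    return {bucket: counts.most_common(1)[0][0] for bucket, counts in votes.items()}


def per_class_accuracy(model, examples, default="cat"):
    """Fraction of each class's test examples that the model labels correctly."""
    correct, total = Counter(), Counter()
    for bucket, label in examples:
        total[label] += 1
        if model.get(bucket, default) == label:
            correct[label] += 1
    return {label: correct[label] / total[label] for label in total}


test_set = make_examples("cat", CAT_TEST) + make_examples("dog", DOG_TEST)
biased_model = train(make_examples("cat", CAT_TRAIN) + make_examples("dog", DOG_TRAIN_BIASED))
recurated_model = train(make_examples("cat", CAT_TRAIN) + make_examples("dog", DOG_TRAIN_RECURATED))

print("biased:    ", per_class_accuracy(biased_model, test_set))     # {'cat': 1.0, 'dog': 0.5}
print("re-curated:", per_class_accuracy(recurated_model, test_set))  # {'cat': 0.8, 'dog': 0.8}
```

Re-curating the data trades a little cat accuracy for a large gain in dog accuracy, so both classes end up treated equally. The exact numbers depend entirely on the made-up counts; the point is the direction of the effect.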

Four middle school students learn about supervised machine learning and classification. Credit: Justin Aglio

Finally, the course concluded with a session on ethical design. Students were tasked with reimagining YouTube’s recommendation algorithm by identifying the stakeholders in the system and the values those stakeholders might hold. What they produced was amazing.

To summer…and beyond

Two things became very clear during this pilot. First, students were much more capable of grappling with this material than I expected. Second, they needed more time to engage with it. Three forty-five-minute sessions were simply not enough for them to produce projects they were proud of or to explore the technology they were engaging with.

This summer, the Personal Robots group will host a week-long summer workshop at the MIT Media Lab. The workshop will cover many of the same topics as the pilot program: kids will learn how to train their own image classifiers and learn about ethical design processes. The week-long format, however, will allow us to expand on each of these topics so that students truly feel like experts in AI and ethics. Students will learn about other kinds of artificial intelligence systems, such as the k-nearest neighbors algorithm. They will also grow their ethical toolbox through speculative fiction writing workshops or role-playing exercises. As we refine the curriculum based on our observations and feedback, we plan to make it open source later this year.
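For readers unfamiliar with the k-nearest neighbors algorithm, here is a minimal sketch of how it classifies: it simply stores labeled examples and labels a new one by majority vote among the k closest stored examples. The two features (ear pointiness and snout length) and all of their values are hypothetical, chosen only for illustration; this is not code from the workshop curriculum.

```python
import math
from collections import Counter

# Hypothetical labeled examples: ((ear_pointiness, snout_length), label).
TRAINING_DATA = [
    ((0.9, 0.2), "cat"),
    ((0.8, 0.3), "cat"),
    ((0.85, 0.25), "cat"),
    ((0.3, 0.8), "dog"),
    ((0.2, 0.9), "dog"),
    ((0.35, 0.7), "dog"),
]


def knn_predict(training_data, query, k=3):
    """Label `query` by majority vote among its k nearest training examples."""
    # Sort stored examples by straight-line (Euclidean) distance to the query.
    nearest = sorted(training_data, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


print(knn_predict(TRAINING_DATA, (0.75, 0.3)))  # cat
print(knn_predict(TRAINING_DATA, (0.3, 0.75)))  # dog
```

Unlike a neural network, k-NN has no training step beyond storing the examples, which makes it easy for students to trace by hand. Choosing an odd k avoids tied votes when there are only two classes.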

The workshop will run from June 24-28 at the Media Lab in Cambridge, MA. There is a $150 fee for students to participate, which covers the cost of trained instructors from our collaborator EMPOW Studios and a t-shirt. You can find out more about the workshop and register your child for it here: https://empow.me/ai-ethics-summer-program-mit

This content has been reproduced under a Creative Commons Attribution 4.0 International license (CC BY 4.0).

To access the original article, please see here.
